Prerequisites

Docker installed and running on your machine.

About workers

In the previous section of the tutorial, you learned:

- how work pools are a bridge between the Prefect orchestration layer and infrastructure for flow runs, and can be dynamically provisioned.

- how to transition from persistent infrastructure to dynamic infrastructure with flow.deploy instead of flow.serve.

Set up a work pool and worker

For this tutorial you will create a Docker type work pool through the CLI. Using the Docker work pool type means that all work sent to this work pool runs within a dedicated Docker container using a Docker client available to the worker.

Create a work pool

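To set up a Docker type work pool, run:

prefect work-pool create --type docker my-docker-pool

Then list your work pools (for example with prefect work-pool ls) and confirm that my-docker-pool is listed in the output.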

Next, check that you can see this work pool in your Prefect UI.

Navigate to the Work Pools tab and verify that you see my-docker-pool listed.

When you click into my-docker-pool, you should see a red status icon signifying that this work pool is not ready.

To make the work pool ready, start a worker.

Start a worker

A worker is a lightweight polling process that kicks off scheduled flow runs on a specific type of infrastructure (such as Docker). To start a worker on your local machine, open a new terminal and confirm that your virtual environment has prefect installed.

Run the following command in this new terminal to start the worker:

prefect worker start --pool my-docker-pool

Once the worker is polling, you should see a Ready status indicator on your work pool.

Keep this terminal session active for the worker to continue to pick up jobs.

Since you are running this worker locally, the worker will terminate if you close the terminal.

In a production setting this worker should run as a daemonized or managed process.

Next, deploy your tutorial flow to my-docker-pool.

Create the deployment

From the previous steps, you now have:

- A flow

- A work pool

- A worker

Now update your repo_info.py file to build a Docker image, and update your deployment so your worker can execute it.

Make the following updates in repo_info.py:

- Change flow.serve to flow.deploy.

- Tell flow.deploy which work pool to deploy to.

- Tell flow.deploy the name for the Docker image to build.

repo_info.py looks like this:

repo_info.py
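As a minimal sketch of the three changes listed above, the keyword arguments for flow.deploy could be assembled as shown here. The helper build_deploy_kwargs, the deployment name, the image name, and the registry path are all illustrative assumptions rather than part of Prefect; only the parameter names name, work_pool_name, image, and push correspond to flow.deploy arguments. The helper sets push=False only when no registry is given.

```python
from typing import Any, Optional


def build_deploy_kwargs(
    image_name: str,
    tag: str,
    work_pool_name: str = "my-docker-pool",
    registry: Optional[str] = None,
) -> dict[str, Any]:
    """Assemble keyword arguments for flow.deploy.

    Illustrative helper only; it is not part of Prefect.
    """
    kwargs: dict[str, Any] = {
        # Placeholder deployment name chosen for this sketch.
        "name": "my-first-deployment",
        # The work pool the deployment is sent to.
        "work_pool_name": work_pool_name,
    }
    if registry is None:
        # Local worker: build the image but do not push it to a registry.
        kwargs["image"] = f"{image_name}:{tag}"
        kwargs["push"] = False
    else:
        # Remote worker: include the registry name and leave push unset
        # so that the built image is pushed.
        kwargs["image"] = f"{registry}/{image_name}:{tag}"
    return kwargs


if __name__ == "__main__":
    print(build_deploy_kwargs("my-first-deployment-image", "tutorial"))
```

With Prefect installed, the resulting dictionary would be passed to the flow in repo_info.py, for example as get_repo_info.deploy(**kwargs), where get_repo_info is an assumed name for the tutorial flow.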

Why the push=False?

For this tutorial, your Docker worker is running on your machine, so you don’t need to push the image built by flow.deploy to a registry. When your worker is running on a remote machine, you must push the image to a registry that the worker can access.

Remove the push=False argument, include your registry name, and ensure you’ve authenticated with the Docker CLI to push the image to a registry.

Dockerfile

In this example, Prefect generates a Dockerfile for you that builds an image based on one of Prefect’s published images. The generated Dockerfile copies the current directory into the Docker image and installs any dependencies listed in a requirements.txt file.

To use a custom Dockerfile, specify the path to the Dockerfile using the DeploymentImage class:

repo_info.py

Modify the deployment

To update your deployment, modify your script and rerun it. You’ll need to make one update to specify a value for job_variables to ensure your Docker worker can successfully execute scheduled runs for this flow. See the example below.

The job_variables section allows you to fine-tune the infrastructure settings for a specific deployment. These values override default values in the specified work pool’s base job template.

When testing images locally without pushing them to a registry (to avoid potential errors like docker.errors.NotFound), we recommend including an image_pull_policy job_variable set to Never. However, for production workflows, push images to a remote registry for more reliability and accessibility.

Set the image_pull_policy as Never for this tutorial deployment without affecting the default value set on your work pool:

repo_info.py
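The override behavior described above can be pictured as a dictionary merge. The sketch below is a simplified model, not Prefect's implementation: WORK_POOL_DEFAULTS is a hypothetical stand-in for the defaults in a work pool's base job template, and effective_job_settings is an illustrative helper.

```python
from typing import Any

# Hypothetical work pool defaults, standing in for the base job template.
WORK_POOL_DEFAULTS: dict[str, Any] = {
    "image_pull_policy": "IfNotPresent",
    "stream_output": True,
}


def effective_job_settings(
    defaults: dict[str, Any], job_variables: dict[str, Any]
) -> dict[str, Any]:
    """Return the defaults with per-deployment overrides applied.

    A new dictionary is built, so the work pool defaults are not mutated.
    """
    return {**defaults, **job_variables}


if __name__ == "__main__":
    # The override used in this tutorial: never pull, use the local image.
    job_variables = {"image_pull_policy": "Never"}
    print(effective_job_settings(WORK_POOL_DEFAULTS, job_variables))
```

In repo_info.py, the same dictionary would be supplied through the job_variables argument of flow.deploy, that is, job_variables={"image_pull_policy": "Never"}.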

Learn more

- Learn how to configure deployments in YAML with prefect.yaml.

- Guides provide step-by-step recipes for common Prefect operations.

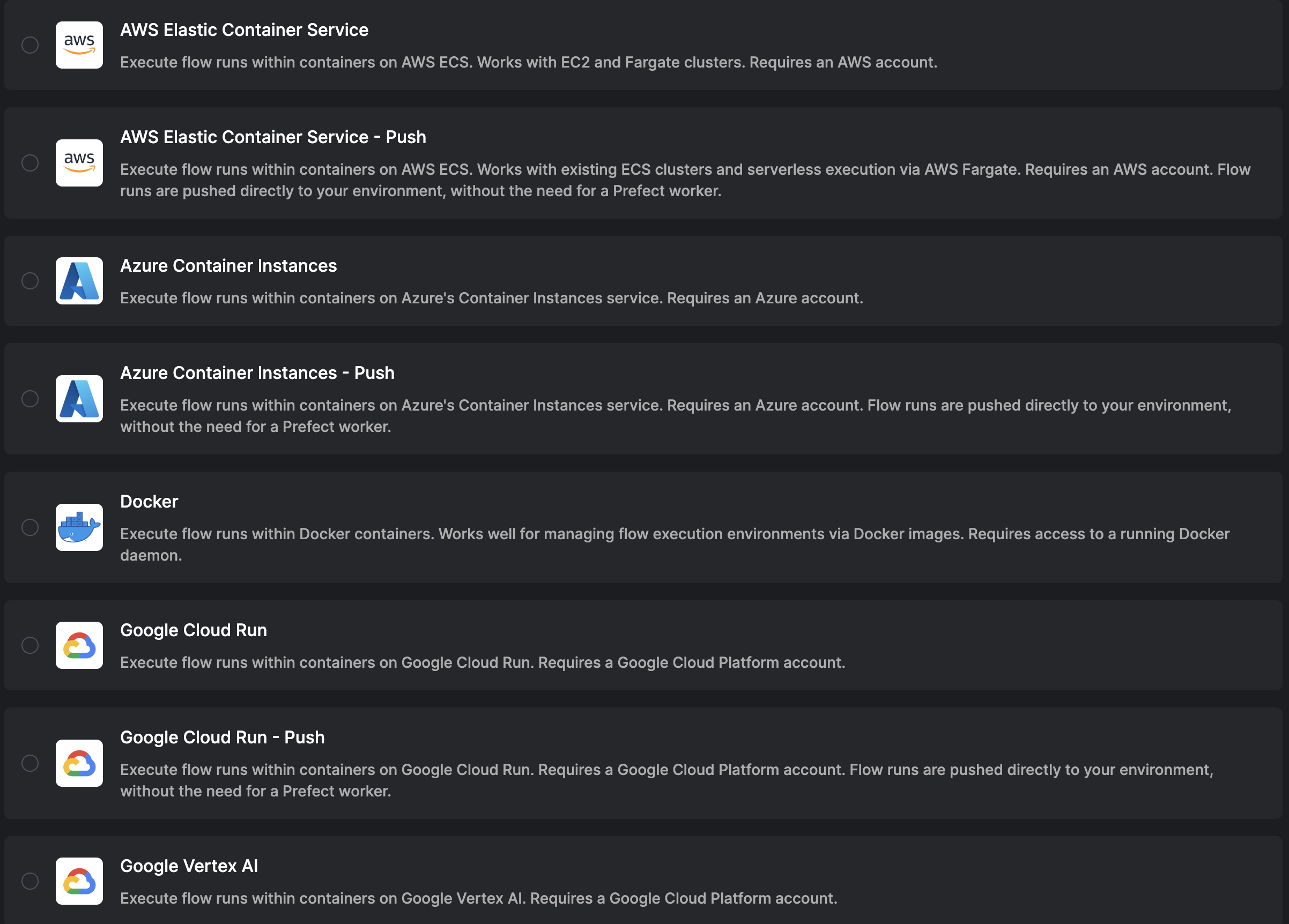

Run Deployments on Serverless Infrastructure with Prefect Workers

Prefect provides hybrid work pools for workers to run flows on the serverless platforms of major cloud providers. The following options are available:

- AWS Elastic Container Service (ECS)

- Azure Container Instances (ACI)

- Google Cloud Run

- Google Cloud Run V2

- Google Vertex AI

In this guide you will:

- Create a work pool that sends work to your chosen serverless infrastructure

- Deploy a flow to that work pool

- Start a worker in your serverless cloud provider that will poll its matched work pool for scheduled runs

- Schedule a deployment run that a worker will pick up from the work pool and run on your serverless infrastructure

For in-depth instructions, see the guide for your platform:

- AWS ECS guide in the prefect-aws docs

- Azure Container Instances guide

- Google Cloud Run guide in the prefect-gcp docs

- For Google Vertex AI, follow the Cloud Run guide, substituting Google Vertex AI where Google Cloud Run is mentioned.

Steps

- Make sure you have a user or service account on your chosen cloud provider with the necessary permissions to run serverless jobs

- Create the appropriate serverless work pool that uses a worker in the Prefect UI

- Create a deployment that references the work pool

- Start a worker in your chosen serverless cloud provider infrastructure

- Run the deployment

Next steps

Push versions of the AWS ECS, Azure Container Instances, and Google Cloud Run work pools, which do not require a worker, are available with Prefect Cloud. Read more in the Serverless Push Work Pool Guide.

Learn more about workers and work pools in the Prefect concept documentation.

Learn about installing dependencies at runtime or baking them into your Docker image in the Deploying Flows to Work Pools and Workers guide.

Daemonize Processes for Prefect Deployments

When running workflow applications, it can be helpful to create long-running processes that run at startup and are robust to failure. In this guide you’ll learn how to set up a systemd service to create long-running Prefect processes that poll for scheduled flow runs. A systemd service is ideal for running a long-lived process on a Linux VM or physical Linux server. We will use systemd to automatically start a Prefect worker or long-lived serve process when Linux starts.

This approach provides resilience by automatically restarting the process if it crashes.

In this guide we will:

- Create a Linux user

- Install and configure Prefect

- Set up a systemd service for the Prefect worker or .serve process

Prerequisites

- An environment with a Linux operating system with systemd and Python 3.9 or later.

- A superuser account (you can run sudo commands).

- A Prefect Cloud account, or a local instance of a Prefect server running on your network.

- If daemonizing a worker, you’ll need a Prefect deployment with a work pool your worker can connect to.

On a distribution that uses the yum package manager, you can install Python and pip with sudo yum install -y python3 python3-pip.

Step 1: Add a user

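Create a user account on your Linux system for the Prefect process. While you can run a worker or serve process as root, it’s good security practice to avoid doing so unless you are sure you need to. In a terminal, create the account and set its password, for example with sudo useradd -m prefect followed by sudo passwd prefect. When prompted, enter a password for the prefect account.

Next, log in to the prefect account by running:

sudo su prefect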

Step 2: Install Prefect

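Run:

pip3 install prefect

If you install Prefect into a virtual environment instead of globally, point the service’s start command at the prefect application in the bin subdirectory of your virtual environment.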

Next, set up your environment so that the Prefect client will know which server to connect to.

If connecting to Prefect Cloud, follow the instructions to obtain an API key and then run the following:

prefect cloud login -k YOUR_API_KEY

Finally, run the exit command to sign out of the prefect Linux account.

This command switches you back to your sudo-enabled account so you can run the commands in the next section.

Step 3: Set up a systemd service

See the section below if you are setting up a Prefect worker. Skip to the next section if you are setting up a Prefect .serve process.

Setting up a systemd service for a Prefect worker

Move into the /etc/systemd/system folder and open a file for editing.

We use the Vim text editor below.
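As a rough sketch of what such a service file might contain, the Python helper below renders a minimal systemd unit as text. The helper render_unit is illustrative and not part of Prefect or systemd. The absolute path /usr/local/bin/prefect, the working directory, and the placeholder YOUR_WORK_POOL_NAME are assumptions to replace with your own values (which prefect shows where the executable is installed).

```python
def render_unit(
    description: str,
    exec_start: str,
    user: str = "prefect",
    working_directory: str = "/home",
) -> str:
    """Render a minimal systemd service unit as a string (illustrative helper)."""
    lines = [
        "[Unit]",
        f"Description={description}",
        "",
        "[Service]",
        # Run as the unprivileged account rather than root.
        f"User={user}",
        f"WorkingDirectory={working_directory}",
        # The command systemd runs to start the process.
        f"ExecStart={exec_start}",
        # Restart the process whenever it exits or crashes.
        "Restart=always",
        "",
        "[Install]",
        # Start the service during a normal multi-user boot.
        "WantedBy=multi-user.target",
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumed install path and placeholder pool name; adjust both.
    print(
        render_unit(
            "Prefect worker",
            "/usr/local/bin/prefect worker start --pool YOUR_WORK_POOL_NAME",
        )
    )
```

The printed text could be saved as a file such as my-prefect-service.service in /etc/systemd/system. For a .serve process, the same layout applies with exec_start set to the command that runs your flow file.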

Setting up a systemd service for .serve

Copy your flow entrypoint Python file and any other files needed for your flow to run into the /home directory (or the directory of your choice).

Here’s a basic example flow:

my_file.py

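To change your flow code without restarting the process, you can store the code in a remote Git repository and load it with flow.from_source().serve(), as in the example below.

my_remote_flow_code_file.py

Move into the /etc/systemd/system folder and open a file for editing.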

We use the Vim text editor below.

Save, enable, and start the service

To save the file and exit Vim, press the escape key, type :wq!, then press the return key.

Next, run sudo systemctl daemon-reload to make systemd aware of your new service.

Then, run sudo systemctl enable my-prefect-service to enable the service.

This command will ensure it runs when your system boots.

Next, run sudo systemctl start my-prefect-service to start the service.

Run your deployment from the UI and check out the logs on the Flow Runs page.

You can see if your daemonized Prefect worker or serve process is running and see the Prefect logs with systemctl status my-prefect-service.
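For repeatable setups, the systemctl sequence above can be scripted. The following is a minimal sketch using only the Python standard library; service_steps and run_steps are illustrative names, and the default dry-run mode only prints the commands instead of executing them.

```python
import shlex
import subprocess


def service_steps(service: str) -> list[list[str]]:
    """Return the systemctl commands from this guide, in order."""
    return [
        ["sudo", "systemctl", "daemon-reload"],    # make systemd aware of the new unit
        ["sudo", "systemctl", "enable", service],  # start the service at boot
        ["sudo", "systemctl", "start", service],   # start the service now
        ["systemctl", "status", service],          # show state and recent logs
    ]


def run_steps(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it."""
    for argv in steps:
        print(shlex.join(argv))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code,
            # which includes a status check on a service that is not active.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_steps(service_steps("my-prefect-service"))
```

Passing dry_run=False executes the commands, which requires sudo rights on the machine.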

That’s it!

You now have a systemd service that starts when your system boots, and will restart if it ever crashes.